The observation by @59FLH fails to account for how Doppler radar ballistic chronographs actually work. ALL of these units 1) measure velocity downrange and back-calculate “muzzle velocity” (actually velocity-at-device), and 2) ping the bullet with multiple pulses, which are required to establish an appropriate decay ratio for this calculation.

In every ping of the bullet, a Doppler radar ballistic chronograph captures data that offers BOTH the distance of capture AND the velocity of the object. The internal chronometer knows when the pulse is transmitted and when the echo returns, and the time between the two is used to calculate the distance of the object when pinged. The Doppler radar ALSO returns the instantaneous velocity of the object by measuring the frequency shift of the echo vs. the original signal. The units then record multiple pings (generally expected to be a 1,300 Hz ping rate), which return multiple positions and velocities. Those allow calculation of a decay ratio, which is then used to back-calculate the “velocity at device” that is displayed as muzzle velocity.

So “where the radar reads the bullet” is completely irrelevant. Unlike optical chronographs, which only read the velocity at their physical position, Doppler radars know where they read the velocity, and they read multiple positions and velocities to allow back-calculation of muzzle velocity.
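For anyone who wants to see the arithmetic, here is a rough Python sketch of the back-calculation described above. To be clear, this is NOT any manufacturer's firmware: the straight-line fit of velocity against distance, the function names, and the synthetic numbers are all my own assumptions for illustration, and real units may use a different decay model entirely.

```python
# Illustrative sketch only. The linear decay model and all names below are
# assumptions for this example, not any vendor's actual algorithm.

C = 299_792_458.0  # speed of light in m/s


def range_from_echo(round_trip_s):
    """Distance to the target from the round-trip time of one pulse."""
    return C * round_trip_s / 2.0


def velocity_from_doppler(f_tx_hz, f_shift_hz):
    """Radial velocity from the Doppler shift of the echo (valid for v << c)."""
    return C * f_shift_hz / (2.0 * f_tx_hz)


def velocity_at_device(distances_m, velocities_mps):
    """Least-squares line through (distance, velocity) pairs.

    Returns (intercept, slope): the intercept is the velocity extrapolated
    back to distance zero, and the slope is the velocity lost per metre.
    """
    n = len(distances_m)
    mean_d = sum(distances_m) / n
    mean_v = sum(velocities_mps) / n
    sxx = sum((d - mean_d) ** 2 for d in distances_m)
    sxy = sum((d - mean_d) * (v - mean_v)
              for d, v in zip(distances_m, velocities_mps))
    slope = sxy / sxx
    intercept = mean_v - slope * mean_d
    return intercept, slope


# Synthetic shot: 853.44 m/s (2800 fps) at the device, with a made-up decay
# of 0.9 m/s per metre, "pinged" at 21 distances between 5 m and 30 m.
true_v0 = 853.44
decay = -0.9
distances = [5.0 + 1.25 * i for i in range(21)]
velocities = [true_v0 + decay * d for d in distances]

v0, slope = velocity_at_device(distances, velocities)
print(f"back-calculated velocity at device: {v0:.2f} m/s")
print(f"fitted decay: {slope:.3f} m/s per metre")
```

The point of the sketch is that none of the individual readings is taken at the device, yet the fit still recovers the velocity at distance zero, because each reading carries its own distance.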

I don’t want to overwhelm the thread, and I may not have shared these on this site yet, but here is some evidence from experiments I have been running to compare several chronographs on the market:

I’m partway through a relatively large comparison matrix across several chronographs, and this “offset error” is a recurring problem for each of the 3 Athlon chronographs I have used for testing. It HAS improved since the original release. May and June were kinda ugly, with almost EVERY session showing these offset errors, but after the July firmware update they dropped to ~1/4-1/3 of sessions, and the magnitude of the offset dropped as well.

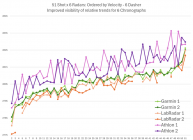


Here is an example of the offset error compared to other brands: the green lines are 2 Garmins, the orange lines are 2 LabRadar LX’s, and the pink lines are 2 Athlons.

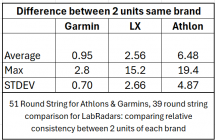


No, with this experiment I cannot definitively say whether any one unit is reading the “true velocity.” BUT these tests (multiple iterations of this test so far) DO offer a strong indication of 1) whether one brand/model or another is delivering its promised precision, and 2) whether both units of a given brand/model COULD be reading the “true velocity.” Considering the promised precision of +/-0.1% for all of these units, for this 2800fps load no unit should be more than +/-2.8fps from “truth,” so the two units of each brand (and really ALL units) should agree with one another within 5.6fps.

The Garmin units DID deliver that standard for every shot fired, never exceeding 2.8fps spread from one another (potentially +/-0.05% precision). The LabRadars averaged 2.6fps spread from one another, with a max disagreement of 15.2fps (which fails the +/-0.1% specification promise). The Athlons, however, AVERAGED a larger disagreement than their promised spread: 6.5fps average disagreement between the 2 units, and a max disagreement of 19.4fps. Using a +/-0.1% specification, this data proves that it is impossible for BOTH Athlons to be reading within their spec of the “true velocity.” Looking at the agreement of the green and orange lines above, we can see the Garmins and LabRadars agreed quite closely, with only a few shots across the LabRadars disagreeing by more than promised spec, and NONE of the shots across the Garmins failing that promise.

In other words, both Garmins CAN be correct to true velocity, because they agree closely enough that if one is right, the other could also be right within spec. However, the Athlons CANNOT both be reading the true velocity on average, because if one is reading within the promised 0.1%, the other is too far away to also be within that margin of truth.
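The spec arithmetic is simple enough to check in a few lines of Python. This is just a sketch using the summary numbers quoted in this post; the function names are mine, and it only tests whether two units COULD both be within spec, not which one is right.

```python
# Sketch of the agreement check, using only the figures quoted in this post.
# Function names are made up for illustration.

def spec_band_fps(nominal_fps, spec_fraction=0.001):
    """Half-width of the spec band, e.g. +/-0.1% of 2800 fps = +/-2.8 fps."""
    return nominal_fps * spec_fraction


def max_allowed_disagreement_fps(nominal_fps, spec_fraction=0.001):
    """Two in-spec units can differ by at most twice the band:
    one reading at the top of the band, the other at the bottom."""
    return 2.0 * spec_band_fps(nominal_fps, spec_fraction)


def both_can_be_in_spec(disagreement_fps, nominal_fps, spec_fraction=0.001):
    """True if a disagreement this size is possible with both units in spec."""
    limit = max_allowed_disagreement_fps(nominal_fps, spec_fraction)
    return abs(disagreement_fps) <= limit


NOMINAL_FPS = 2800.0

# Unit-to-unit disagreement figures reported above, in fps.
reported = {
    "Garmin max": 2.8,
    "LabRadar average": 2.6,
    "LabRadar max": 15.2,
    "Athlon average": 6.5,
    "Athlon max": 19.4,
}

limit = max_allowed_disagreement_fps(NOMINAL_FPS)
print(f"max disagreement with both units in spec: {limit:.1f} fps")
for label, disagreement in reported.items():
    verdict = "possible" if both_can_be_in_spec(disagreement, NOMINAL_FPS) else "NOT possible"
    print(f"{label}: {disagreement} fps -> both in spec is {verdict}")
```

Note that passing this check does not prove either unit is reading truth. It only means the pair's disagreement is not, by itself, evidence of a spec failure.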

View attachment 1720074

I have multiple replicates of these tests, and the same results persist: high or low offset disagreements by the Athlons, and higher volatility, which makes it impossible to consider the Athlons to be delivering their promised spec of +/-0.1% precision to truth.

I’m happy to answer any questions I can, and to expand the scope of this comparison matrix.