Actually, no, you can't. Volume has a huge effect on everything that happens to the bullet. Ballisticians don't distinguish between where a case volume changes, they just want to know how much volume the case has. The chart I posted shows that there is no relationship between weight and volume, there is no reason to guess as to whether there is a relationship. Even with 10 data points you can see that as the weight increased the volume is random, it jumps all over the place. You can't say that if my case weight increases by a tenth of a grain then my volume will increase by X amount.

I'll try to explain in a coherent manner why I respectfully disagree with some of the claims in your statement.

Under the typical peak internal pressures of 50-60K psi that occur in centerfire cartridges, the brass case undergoes plastic deformation and adopts a conformation that closely matches the chamber in which it was fired. In fact, fired cases are generally extremely uniform in their external dimensions, often as uniform as prepped (resized) brass. Because brass is about 8.5 times denser than water, any extra brass in a case of fixed external dimensions takes up space that would otherwise be internal volume. So as the internal volume of a case increases, its weight relative to other cases will decrease. This is simple physics. So what are the possible sources of error that could lead to the internal volume of cases NOT being proportional to their weight?

1) The first major assumption in using case weight as a surrogate method for estimating case volume is that the density of brass within a single Lot # of cases is uniform. If you do not believe the density of brass within a single Lot # is uniform, we can agree to disagree on everything else and there is little point in reading any further. I would not make such a claim for the density of brass from different Lot #s (or from different manufacturers). Comparing brass by weight from different Lot #s, or different manufacturers, may be comparing apples to oranges.

2) If all external dimensions of two hollow objects are identical, and they are composed of material of identical density, then the difference in their weights WILL be proportional to the difference in their internal volumes. This is simple physics and not subject to "interpretation". So the main question becomes, what could be the cause of any possible discrepancy in the exterior dimensions of two cases fired in the same chamber? There are two most likely possibilities:

A) The region around the bottom of the case that includes the primer pocket, the extractor groove, and various other beveled surfaces, is the part of the case that DOES NOT expand to closely fit the external dimensions of the chamber. Some of these dimensions, such as casehead diameter, may differ slightly, and to some extent do undergo deformation under sufficient pressure. However, they can effectively be removed from consideration by the simple physical constraints they are subject to. For example, if the diameter of the casehead grows too large, it will cause difficulty in chambering that case. Along the same line, if the diameter of the primer pocket itself is too large, or too small, primers will not fit properly. Further, significant changes in the depth of the primer pocket would also be obvious, because a uniforming tool would only work in some cases, but not others. So in general, we have a number of simple observations that allow us to conclude whether the outer dimensions of the casehead and primer pocket regions of the brass have been significantly altered.

The obvious remaining culprit in this region of the case is the extractor groove. Certainly, non-uniformity in the extractor groove dimensions between two cases with otherwise identical external dimensions would directly cause a change in case weight without affecting case volume. In fact, I do believe that variance in dimensions of the extractor groove is likely to be at least part of the reason for the existence of "outliers", where case volume lies off the trend line for case weight versus case volume. I have examined the extractor grooves on many a case from a single Lot # and I can tell you simply by eye that whatever variance there may be between cases, it is equivalent to only a very small fraction of the total internal volume of the case. Therefore, there would need to be a large relative difference in the size of the extractor grooves of two cases to account for any significant variance between their case weight to case volume ratios. Nonetheless, I acknowledge that this is a likely source of the observed variance between the two values.

B) The second most obvious way in which the outer dimensions of two cases that were fired in the same chamber could differ significantly would be some sort of stress or damage that occurred after the round was ejected from the chamber. In fact, various types of damage such as flat-spotting of necks or dings to the shoulder are quite common with strong ejector springs.

Anything that changes the external dimensions of a case without altering the mass of brass in it will cause it to move off a trend line for a graph of case weight versus case volume. Squeezing a case with pliers, as I mentioned in an earlier post, would be an extreme example of this phenomenon. The key here is that it is critical to closely examine cases for which you are determining case volume, whether directly with water or by weight comparison, because changes in the external dimensions of the case will affect the outcome regardless of which approach you use. Simple quality control checks for any cases that appear to be far off the trend line are visual inspection and/or direct measurement of the external dimensions of the case body, neck, shoulder, etc.
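To put a rough number on the physics in point 2, here is a minimal sketch of what the slope of a weight versus volume trend line ought to be if the two assumptions hold (identical external dimensions, uniform brass density). The 8.5 figure is the approximate density ratio quoted above; the function name is just something I made up for illustration.

```python
# Sketch only: expected change in water capacity for a given change in case weight,
# ASSUMING identical external dimensions and uniform brass density.

BRASS_DENSITY_G_PER_CC = 8.5  # approximate value quoted in the post
WATER_DENSITY_G_PER_CC = 1.0  # close enough near room temperature


def capacity_change_gr_h2o(extra_brass_gr: float) -> float:
    """Change in water capacity (grains of water) for a change in case weight (grains).

    Extra brass occupies space that water would otherwise fill, so the sign is negative.
    Both quantities are in grains, so the unit conversion cancels and only the
    density ratio remains.
    """
    return -extra_brass_gr * WATER_DENSITY_G_PER_CC / BRASS_DENSITY_G_PER_CC


if __name__ == "__main__":
    for delta in (0.1, 1.0, 2.0):
        print(f"{delta:+.1f} gr brass -> {capacity_change_gr_h2o(delta):+.3f} gr H2O")
```

In other words, under those assumptions each extra grain of brass should cost roughly 0.12 grains of water capacity. A fitted slope far from that value would point to one of the error sources in A) or B), or to measurement error.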

These are my arguments to support the notion that

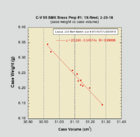

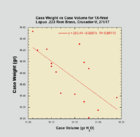

within reasonable limits, case weight should be proportional to case volume. As a demonstration that, in fact, empirical evidence also supports this notion, I have attached two examples of case weight versus case volume for two fairly recent brass preps in one of my rifles. Every time I start in with a new prep of virgin brass, I carry out this analysis. As a result, I have determined case weight and respective case volume for thousands of cases over the years, usually in small groups such as these. I'd much rather have a single data set for several hundred cases all determined at the same time, but frankly, I'm way too lazy to do that many all at once. These two files might be considered as representing approximately the best (top graph) and worst (bottom graph) case scenarios with respect to the trend lines I typically obtain from this kind of analysis. The top graph is about the best group of cases I have ever seen, the bottom is a little worse (more outliers) than average. Both are relatively small numbers of cases from a statistical point of view (n=10, n=15). There are some minor differences such as the use of different units for the x- and y-axes, which do not effectively alter the relative trend lines in terms of regression analysis. Nonetheless, there are two critical take-home messages from these graphs. The first is that in both cases, the trend line shows a clear negative slope, meaning that as

case weight decreases, case volume increases. The second is that the coefficients of correlation (r) are both positive, and much closer to 1.0 than zero. Thus, regression analysis also supports a strong linear association between the x and y variables (case weight and case volume).
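For anyone who wants to run the same kind of trend line on their own brass, here is a minimal sketch of an ordinary least-squares fit using nothing but the Python standard library. The numbers in it are made up purely for illustration; they are NOT the data behind my graphs, and the function and variable names are hypothetical.

```python
import math


def linear_fit(x, y):
    """Ordinary least-squares fit of y on x. Returns (slope, intercept, r)."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two equal-length sequences with at least 2 points")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    syy = sum((yi - mean_y) ** 2 for yi in y)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    if sxx == 0 or syy == 0:
        raise ValueError("x and y must each contain more than one distinct value")
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    r = sxy / math.sqrt(sxx * syy)  # Pearson correlation coefficient
    return slope, intercept, r


# HYPOTHETICAL example values, invented for this sketch only.
HYPOTHETICAL_WEIGHTS_GR = [170.1, 170.4, 170.8, 171.0, 171.3, 171.9]   # case weight, grains
HYPOTHETICAL_VOLUMES_GR = [56.12, 56.10, 56.03, 56.02, 55.97, 55.91]   # water capacity, grains

if __name__ == "__main__":
    slope, intercept, r = linear_fit(HYPOTHETICAL_WEIGHTS_GR, HYPOTHETICAL_VOLUMES_GR)
    print(f"slope = {slope:.4f} gr H2O per gr brass")
    print(f"r = {r:.3f}, r^2 = {r * r:.3f}")
```

Note that when the slope is negative, r comes out negative as well; r² is the value that runs from 0 to 1 regardless of the direction of the trend.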

I have spent the better part of my adult life in a laboratory, routinely measuring and analyzing extremely small quantities of liquids and solids. I know something about what it takes to do this properly, and with good precision. My gut feeling is that in many cases, people having difficulty quantifying case volume with water are either using measuring equipment that is not appropriate, or their technique is lacking. For example, it is very easy to leave a bubble inside the case, which will cause a significant error in the determined water volume. In addition to the variance in case weight that should be independent of case volume (i.e. variance in the extractor groove), I also believe that error in water volume determination may be part of the reason why not everyone who compares case weight to case volume observes trend lines such as I illustrated here. As I mentioned above, I have routinely carried out this simple analysis with almost every single prep of virgin brass I have done over the years. I have yet to observe a single occasion in which there was not a clear negatively sloped trend line for a graph of case weight versus case volume.

For what it is worth, I do not use the case weight and trend line equation to "estimate" case volume from such a graph. It is simply part of the characterization process for a new brass prep. Because of the existence of occasional outliers that clearly are well away from the trend line, I believe trying to estimate the volume of an individual case from its weight using the graph is not a useful endeavor. As I stated in an earlier post, I use measured case weight as a relatively simple approach to improve consistency of case volume, without having to actually determine case volume with water, which I find to be somewhat of a PITA. I use case weight to sort cases into sub-groups, which I believe are more uniform with respect to volume than if I had done nothing at all. That is my only claim. My arguments as to why this is a valid approach, and the data supporting those arguments, are presented above. As always, YMMV.
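If it helps anyone picture the sorting step, here is a minimal sketch of one way to split a batch into weight sub-groups. The 0.5 grain window and the function name are assumptions for illustration only, not a recommendation and not necessarily the window I use; pick whatever spread makes sense for your brass.

```python
def sort_into_groups(weights_gr, window_gr=0.5):
    """Sort case weights (grains) into sub-groups.

    Each group starts at the lightest unassigned case and takes every case
    within `window_gr` of that starting weight. The window size is an
    assumed, user-chosen value.
    """
    if window_gr <= 0:
        raise ValueError("window_gr must be positive")
    ordered = sorted(weights_gr)
    if not ordered:
        return []
    groups = [[ordered[0]]]
    for w in ordered[1:]:
        if w - groups[-1][0] <= window_gr:
            groups[-1].append(w)   # still within the current group's window
        else:
            groups.append([w])     # start a new group at this weight
    return groups


if __name__ == "__main__":
    # HYPOTHETICAL weights, invented for this sketch only.
    batch = [171.2, 170.1, 172.5, 170.3, 171.0]
    for i, group in enumerate(sort_into_groups(batch), start=1):
        print(f"group {i}: {group}")
```

Cases that land alone in a group at either extreme are the ones worth a closer look (visual inspection, or an actual water volume check) before deciding whether to keep or cull them.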