Temperature Insensitivity of Varget, IMR 4166, IMR 4064, N140 for .308 F-TR

- Thread starter BillC79

Mark W

Gold $$ Contributor

We all wish we didn't get variation in our loads with temperatures. That would be a perfect world.

What I gleaned from the test is that there are viable options for existing loads when our go-to powders are somewhat nonexistent.

Exactly!! I think some of us may be getting grumpy from being shut in too long.

CharlieNC

Gold $$ Contributor

Thanks for the suggestion of n=10. Do you have personal experience or publication data that you can post or cite to support your suggestion that 10 replicates will provide substantially better information than 5 at each temperature and powder point? I would need something more than a preconceived notion to justify the time and expense.

As the next test design is evolving, it appears that it will be n=5 between Varget and IMR 4166 at roughly 10 degree intervals between 60 and 100°F. I’ll use my Autotrickler to more precisely dispense powder charges. Increasing replicates from 3 to 5 and more precise powder measurement should significantly reduce error of the mean and permit greater confidence in the data.

There are several fundamental aspects concerning the use of the standard deviation (SD) for the normal distribution in statistics. It is well known that the avg +/- 1 SD contains 68% of the observations, +/- 2 SD contains 95%, and so on. But in this case, you are not concerned with the individual results but with the behavior of the averages, which you want to explain vs temperature. So you need enough data to obtain a "good" average as the inputs, through which you can fit a smooth curve (or straight line).

How much data to get a solid average? If you "practice" statistics, another well known principle is SD(average) = SD(individuals) / sqrt(n), which tells you how much a larger sample size will reduce the SD of the averages. As an example, let's assume the velocity SD = 6 for the Varget (that is the average SD for the different temps based on 3 shots each). For 4 shots, SD(avg) = 6/sqrt(4) = 6/2 = 3; so if the average was 2550 you would be 68% confident that the real average is within 2550 +/- 3. This is what the automatically plotted error bars are telling you, and you can see that these overlap between adjacent temperatures, meaning the average can be anywhere within that bracket.

So you want to reduce the SD(average) such that the error bars are tighter, to the extent necessary to separate the signal (temperature effect) from the noise (the SD). I proposed 10 as a reasonable sample size to achieve a significant improvement, and using the formula shown above it can be determined for any sample size to find the point of diminishing returns. This is a rather short explanation of a very powerful principle!
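For anyone who wants to see where the diminishing returns set in, here is a minimal Python sketch of the SD(average) = SD(individuals) / sqrt(n) rule described above. It assumes the same illustrative shot-to-shot SD of 6 fps used in the example; the function name `sd_of_mean` and the list of sample sizes are just choices made for illustration.

```python
import math


def sd_of_mean(sd_individual: float, n: int) -> float:
    """Standard deviation of the average of n shots: SD / sqrt(n)."""
    return sd_individual / math.sqrt(n)


# Assumed shot-to-shot SD in fps, taken from the worked example above.
sd_shots = 6.0

for n in (3, 4, 5, 10, 12, 20):
    print(f"n={n:>2}  SD(avg) = {sd_of_mean(sd_shots, n):.2f} fps")
```

Going from 5 to 10 shots shrinks SD(avg) by a factor of sqrt(2), roughly 29%, and each further doubling buys the same proportional gain at twice the cost in ammunition.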

OKBoomer

Spencer Aycock

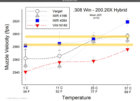

First off, thanks for the research. I'm a data guy too who is chasing the same questions in my FTR rifle. What I see in the chart is that if you want to stay within the rifle's accuracy node range of about 2584 to 2596 fps, then you would want to shoot IMR4064 up to about 77°F, then switch to IMR4166 for the hot weather. You pretty well concluded that, but I wonder about a rifle's tendency to produce a shallower trend in velocity within a node range despite a graduated pressure increase due to powder charge weight, or in this instance temperature.

I added the yellow highlight lines to your chart at where I see the upper and lower limits of the node range.

BillC79

Gold $$ Contributor

Thank you for the descriptive statistics primer on standard deviation and the value of increasing sample size to reduce the standard deviation of the mean and to increase the confidence that the calculated mean is close to the parametric mean. It is simple to see that 10 samples would lead to a smaller standard deviation of the mean than 5 samples and that 20 samples would lead to a smaller one than 10 samples. One hundred samples would be even better.

It is important to know what question I am attempting to answer with all of this shooting and velocity measuring at different temperatures. I want to know if the slopes of the lines formed by the temperature vs velocity data points differ for Varget and IMR 4166. Put simply, does one line point upward more steeply than the other, indicating a different degree of temperature insensitivity? It will help to keep the standard deviation at a small enough level to be confident that the means forming a line are relatively accurate. For most of us, that will be a subjective eyeball assessment. The slopes of the two lines for Varget and IMR 4166 could be compared by analysis of covariance and tested for statistical significance. A power analysis could be completed to determine what the sample size needs to be (e.g., 34) to establish 0.05 for alpha and 0.80 for power.

I’m not going there.

Based on what I have done so far, the easiest way to reduce a major contributor to the standard deviation is to decrease the variability of the powder charge per cartridge. I’m going there with n=5. Once I have that mean ± SD data for n=5 plotted, I’ll be better able to assess the value of increasing the sample size to answer the question that I am asking.
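The slope question posed above (does one powder's velocity line rise more steeply with temperature than the other's) can be sketched in a few lines of Python. This is only an eyeball-level comparison using ordinary least squares on the mean velocities. It is not the analysis of covariance mentioned above, which would need the individual shot data and a statistics package. The temperatures and velocities below are made-up placeholders, not data from this thread, and `fit_line`, `powder_a` and `powder_b` are hypothetical names.

```python
def fit_line(temps, velocities):
    """Ordinary least-squares fit; returns (slope, intercept)."""
    n = len(temps)
    mean_t = sum(temps) / n
    mean_v = sum(velocities) / n
    sxx = sum((t - mean_t) ** 2 for t in temps)
    sxy = sum((t - mean_t) * (v - mean_v) for t, v in zip(temps, velocities))
    slope = sxy / sxx
    intercept = mean_v - slope * mean_t
    return slope, intercept


# Placeholder numbers for illustration only, NOT measurements from this thread.
temps_f = [60, 70, 80, 90, 100]            # degrees Fahrenheit
powder_a = [2580, 2583, 2586, 2589, 2592]  # mean velocity in fps at each temperature
powder_b = [2578, 2583, 2588, 2593, 2598]

slope_a, _ = fit_line(temps_f, powder_a)
slope_b, _ = fit_line(temps_f, powder_b)
print(f"powder A: {slope_a:.2f} fps per degree F")
print(f"powder B: {slope_b:.2f} fps per degree F")
```

With real data, the SD of each mean still decides whether a difference between the two slopes is signal or noise.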

BillC79

Gold $$ Contributor

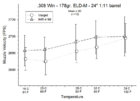

Attached is the preliminary data from test 2 with 5-6 replicates per data point. I switched over to another rifle and bullet combination because of supplies that I had on hand. The powder loads are all estimated to be slightly compressed (4.2 to 5.7%).

Based on the data so far, I wouldn’t hesitate to use IMR 4064 instead of Varget from 34 to 68°F. It looks like IMR 4166 may be comparable to Varget in its insensitivity to temperature-induced velocity changes between 61 and 102°F. However, variation in the data between these temperature points remains high and warrants an increase in replicates from 6 to 12 to try to get better resolution. It also looks like another data point in the 95°F area would be informative.

Information on methods and rationale for study 2 design is available on the web page.

https://wlcastleman.com/equip/shoot/varget/index.htm

Thanks for your ongoing comments and for suggestions for improvement. Additional results will be added as I am able to safely access the range during the COVID-19 restrictions.

BillC79

Gold $$ Contributor

@BillC79, out of curiosity, what loads in grains were you using on the original graph with the 200-20X bullet? And what barrel length and twist?

And thank you for posting your findings!

LRCampos.

The details you seek are on the preceding cited web page. It was a 30 inch 1:10 twist barrel.

I was able to get to the range this A.M. and get the remaining data I needed. Details should be posted by this evening.

Thank you very much!

LRCampos.

Looking forward to the new report, thanks.

Fear the one who has no chronograph nor bore scope. For all he knows is what the target shows...

...or fear those seeking knowledge as they pass by you. Some folks already have the target part figured out.

BillC79

Gold $$ Contributor

The next compilation of data for IMR 4166 and Varget with 12 replicates per temperature is in the graph below.

The website is being updated. In the current draft under revision, the graph is summarized as:

"The rates of temperature-induced velocity increase for IMR 4166 and Varget are very similar between 61°F and 103°F. From mean data depicted in the graph, the rate of increase for IMR 4166 is 0.32 FPS per degree Fahrenheit, whereas the rate of increase for Varget is 0.46 FPS per degree Fahrenheit. While the value is lower for IMR 4166, it is unlikely to be statistically different based on the high standard deviations."

The current conclusions draft under revision is:

"The primary goal of these studies was to identify powders for .308 Win F-TR rifles that had similar insensitivities to temperature-induced increases in velocity as Varget. IMR 4064, IMR 4166 and Vihtavuori N140 were tested between 34°F and 102°F. N140 had similar temperature insensitivity to that of Varget across the full temperature spectrum. However, N140 was estimated to be of limited value in F-TR rifles shooting 200-plus grain bullets given the limited case volume and velocity needed to be competitive at 1000-yard shooting distances. IMR 4064 had very similar temperature insensitivity to that of Varget between 34°F and 68°F in the tests. IMR 4166 had very similar performance to Varget between 61°F and 103°F."

Comments, corrections, arguments and suggestions for improvement are appreciated.
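To put the per-degree rates from the draft summary above into range terms, a short Python sketch can multiply each rate by the temperature span. The rates (0.32 and 0.46 FPS per °F) and the 61°F to 103°F span come from the draft; the function name `velocity_rise` is hypothetical, and the calculation assumes the trend is a straight line over the whole span.

```python
def velocity_rise(rate_fps_per_degf: float, t_low_f: float, t_high_f: float) -> float:
    """Velocity change implied by a constant rate over a temperature span."""
    return rate_fps_per_degf * (t_high_f - t_low_f)


# Rates and temperature span as quoted in the draft summary.
rates = {"IMR 4166": 0.32, "Varget": 0.46}
t_low_f, t_high_f = 61, 103

for powder, rate in rates.items():
    rise = velocity_rise(rate, t_low_f, t_high_f)
    print(f"{powder}: about {rise:.1f} fps from {t_low_f} to {t_high_f} degrees F")
```

Under that linear assumption, the two powders differ by only about 6 fps over the full 42-degree span, which fits the draft's point that the difference is hard to separate from the shot-to-shot scatter.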
