Not to sound too critical of their paper, but when I was teaching or peer reviewing papers, they would have been sent packing. If this were a professional environment, their next review would not go well either.

TLDR: The effort was doomed from the start. They didn't plan enough shooting sessions or replicates to cover the issues, so their stats are weak enough that you don't need fancy math to see it. Then they jump off the rails with their conclusion that their results or methods would apply to anything.

It is one thing to let a few grammatical errors slip past, but it is another to let the paper go while mixing up their units on the most important results of the work.

If they can't be trusted to keep mm and inches straight on the most important graph in the paper, how do you trust them for the rest of the design of experiment or statistical validity work?

The idea of senior design projects is to raise a student to become a scientist/engineer, so shouldn't the design of the experiment and the testing methods be part of the grade? How many replicates it takes to support the hypothesis is the responsibility of the student and the advisors. They cannot print money, but making sure the paper does not miss the main points is within their scope.

Show me that the two-factor test you claim is unique actually works. Or, if the results didn't turn out as predicted, show us why and tell us what the next steps would be in an ideal world where you had improved methods or budgets.

In other words, if the idea is to make the grade of Captain, and there isn't enough fuel in the tanks to make it across to the next port, should we even launch the ship? Or should we stop and teach them that you don't run a weak test plan and then try to draw conclusions with stats? They are supposed to know how to design and run testing, and to know when the math is meaningful and when it is just another example of the abuse of statistics.

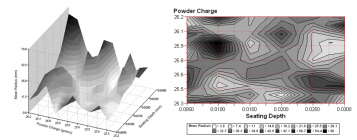

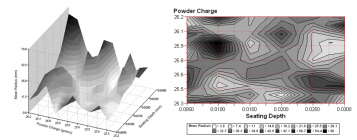

Notice the Seating Depth dimension shown as (mm) on the contour plot? Those should be inches, not mm, and that plot is only the single most important part of the paper. Laymen can spot that mistake, but it takes a scientist to state that the design of experiment and the use of statistics here were very weak for a senior.

They admit some fairly serious issues with their shooting sessions. They mention cleaning every 20 rounds, but say they culled a significant number of shots due to human error and suspect that some bullets tumbled due to copper fouling. There was no real effort to show the wind contribution by analyzing the dimensions of the groups. How big is the effect of wind on the shots over the two-day session while not using flags?

Can the target data be analyzed to determine the number of shots required to ignore the wind? What would the above plots look like if we added the statistical uncertainty to them?
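
As a rough illustration of the uncertainty question, here is a minimal Python sketch. It assumes impacts scatter as a circular normal distribution with a made-up dispersion `sigma`, which is my assumption and not anything taken from the paper's data. The helper names `extreme_spread` and `spread_interval` are hypothetical. It simulates many groups at a given shot count and reports the 5th to 95th percentile range of extreme spread, which is roughly the error bar each point on plots like those would carry.

```python
import math
import random
import statistics

def extreme_spread(shots):
    """Largest center-to-center distance between any two impacts."""
    return max(math.dist(a, b) for i, a in enumerate(shots) for b in shots[i + 1:])

def spread_interval(n_shots, sigma=1.0, trials=2000, seed=1):
    """Simulated 5th and 95th percentile of extreme spread for n_shots per group.

    sigma is an assumed per-axis dispersion in arbitrary units, not a measured value.
    """
    rng = random.Random(seed)
    spreads = []
    for _ in range(trials):
        shots = [(rng.gauss(0.0, sigma), rng.gauss(0.0, sigma)) for _ in range(n_shots)]
        spreads.append(extreme_spread(shots))
    cuts = statistics.quantiles(spreads, n=20)  # 19 cut points in 5% steps
    return cuts[0], cuts[-1]

if __name__ == "__main__":
    for n in (3, 5, 10, 30):
        lo, hi = spread_interval(n)
        print(f"{n:>2} shots: 5%-95% extreme spread {lo:.2f} to {hi:.2f} (ratio {hi / lo:.1f})")
```

The interval is wide at low shot counts and narrows only slowly as shots are added, even with zero wind in the model. Any real wind, fouling, or shooter error would widen it further.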

How about just one side by side of the best and worst part of those plots as evidence that their method yielded anything useful?

Doesn't it make sense to demonstrate that all this effort paid off by showing us a 10-shot group of the 25.9 gr / 0.028 seating depth load versus the 25.9 gr / 0.010 seating depth load?
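
One hedged way to put a number on such a side by side is a permutation test on the radial miss distances of the two groups. The sketch below is Python with made-up coordinates (not measurements from the paper), and the function names are my own. It treats each shot's distance from its own group center as the response, which is a simplification because the center is estimated from the same shots.

```python
import math
import random
from statistics import fmean

def radii(shots):
    """Distance of each impact from the center of its own group."""
    cx = fmean(x for x, _ in shots)
    cy = fmean(y for _, y in shots)
    return [math.dist((x, y), (cx, cy)) for x, y in shots]

def permutation_p_value(a, b, trials=2000, seed=1):
    """Two-sided permutation test on the difference in mean radius."""
    rng = random.Random(seed)
    observed = abs(fmean(a) - fmean(b))
    pooled = list(a) + list(b)
    hits = 0
    for _ in range(trials):
        rng.shuffle(pooled)
        diff = abs(fmean(pooled[:len(a)]) - fmean(pooled[len(a):]))
        if diff >= observed:
            hits += 1
    # Add-one correction keeps the estimate away from an impossible p = 0.
    return (hits + 1) / (trials + 1)

# Made-up 10-shot groups in inches: a hypothetical "best" and "worst" load.
rng = random.Random(7)
best = [(rng.gauss(0, 0.15), rng.gauss(0, 0.15)) for _ in range(10)]
worst = [(rng.gauss(0, 0.30), rng.gauss(0, 0.30)) for _ in range(10)]

p = permutation_p_value(radii(best), radii(worst))
print(f"mean radius best={fmean(radii(best)):.3f} worst={fmean(radii(worst)):.3f} p={p:.3f}")
```

A large p-value here would mean the "best" and "worst" cells cannot be told apart at 10 shots each, which is exactly the kind of evidence the paper should have shown one way or the other.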

Not once are there any words at all on the concept of "would these results reflect any bullet/chamber/barrel, or just this one, and why?" and that is a serious experimental planning error if the claim is to demonstrate a useful test method.

If this is a novel paper, should we be able to apply it to the next test? Would we do things the same way or would there be changes? Is there anything in the paper that the forum would use to find the best combination of charge and depth, or is the conclusion in the paper valid?

How does one take this example and use it next? Should this paper propose the improvements to get the test design to be reliable? Or do we just say that charge and depth can be covered by these same procedures the next time?

Here I don't blame the student for not having the budget, but it is where I would fail them for not pointing out how to fix this method for the next time.

When folks design a factorial experiment in completely new territory, I don't blame them for taking risks and getting burned. They should share their failures and ideas for what they do next if given the chance. That said, there isn't anything new here, and in fact the noise in the results is expected given the number of items in what they call the covariates table. Do we use 55 gr VMax in a Savage factory barrel with this many shots to prove or disprove a test method? Should we all copy this method or plan for something else?

The noise caused by all the factors in their table is their responsibility during the planning, and that should also imply planning for enough tests to add validity to the test design. Here I would have stopped them in their tracks.

Go back to your desk and tell me the budget for pulling out the effect of charge and seating depth while using these materials. How many shots and replicated tests does it take to draw conclusions while ignoring the winds, the brass, the cleaning, etc.? Were the step size and the number of samples good, or should they be changed, and why?
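
For a sense of scale, that budget can be roughed out with the textbook normal-approximation sample size for comparing two means, n = 2 * ((z_alpha + z_beta) * sigma / delta)^2 per cell. This is a standard formula I am swapping in, not the authors' method, and the 5 x 5 grid and the effect sizes below are hypothetical placeholders. A fitted response surface pools information across cells, so treat this as a rough cell-versus-cell check rather than a full power analysis.

```python
import math
from statistics import NormalDist

def shots_per_cell(delta, sigma, alpha=0.05, power=0.80):
    """Samples per cell to detect a mean difference `delta` between two cells.

    sigma is the assumed standard deviation of the response (same units as delta).
    Uses the two-sided normal approximation, so it slightly understates small n.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    return math.ceil(2 * ((z_alpha + z_beta) * sigma / delta) ** 2)

if __name__ == "__main__":
    cells = 5 * 5  # hypothetical grid: 5 charge weights x 5 seating depths
    for ratio in (1.0, 0.5, 0.25):
        n = shots_per_cell(delta=ratio, sigma=1.0)
        print(f"effect = {ratio:.2f} sigma: {n} per cell, {n * cells} total for {cells} cells")
```

The smaller the effect relative to the noise, the faster the total climbs, since halving the detectable difference roughly quadruples the count. Whatever total comes out for a realistic effect size is the number to compare against the 380 shots actually fired.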

The copper fouling gets mentioned, yet they also failed to tie it into the bigger picture with respect to the effect on the whole project and what it would take to actually draw any conclusions. How does one explain keyhole evidence? Can we draw any conclusions on the other shots when we see keyhole shots? Why not show the keyhole shots with respect to the cleaning intervals and where they occur? Do they affect the conclusions? Should the experimental method be altered?

Their actual statistical results are noisy and weak. I wonder if anyone qualified checked their work? In the end, there were 380 shots to find a combination of 25.9 grains and a seating depth of roughly 0.0275" using what they called a "police grade" rifle, otherwise shown as a Savage 10FLP in 223, using Hornady 55 grain VMax bullets.

For their use of the lot as a blocking factor to mean anything, and with all those covariates, they would have had to run the total test several more times to have any validity for their method. Then they would have to add enough testing on a whole different rifle/bullet combination in order to draw any conclusions on the applicability of these results or this method to anything else.

In other words, as scientists they should have known better up front. After all, we have to assume this paper was part of some class or graduation requirement where the goal is to create engineers and scientists who do more than just drop statistical buzzwords and make plots that don't show the statistical uncertainty of the results.

For folks who are expected to know design of experiment, this error is similar to folks with no background calculating Standard Deviations on 3 shots and claiming to have done statistics to validate their conclusions. If you read the paper, don't expect to learn anything about shooting, reloading, proper use of statistics, or a method to find the best seating depth.

The student shows potential, but there is also a danger in the tone of the paper. Rather than boast that the effort is the only known test or statistically designed test method, they missed the opportunity to propose the critical corrections to the work and set up the future work to actually be able to draw any reliable conclusions.

Does the next guy use this method as is? Is a copy of this test method reliable for finding the best charge-seating depth? Would those contour plots be expected to repeat for even this rifle and load?

That a skinny budget cannot cure cancer is forgivable. But if the point is to raise engineering managers and scientists who are honest in their proposals and able to estimate the sample size it takes to draw conclusions that will repeat in some other lab, then here we have failed.

I apologize for the critique of the paper, but in reality it is not a good example for the effort spent. I realize they probably spent their own money and had no support for a more extensive study, but using this topic as the stage for an experimental report was likely their choice. The advisors should have rejected the proposal when the number of tests and shots could not possibly have met the requirements of the statistics and experimental design, or supported the conclusions they drew. YMMV