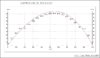

Two shooting friends and I were discussing the strong winds at a recent 600 yard F-Class match. The wind flags were on high poles (35 feet?), but my lane on the far left side of the course was protected by a row of tall trees. The grass showed a much weaker wind near the ground, with some wind reversal relative to the wind flags. I commented that my bullets never got up into the strong winds, since the top of the arc, halfway to the target, was only 28" above the line of sight.

They objected, and everyone immediately pulled out a smartphone and opened their favorite ballistics app. They claimed that I MUST be wrong. Surely my bullets fly higher than 28 inches above my line of sight. They argued that if I were to zero my rifle at 100 yards and then aim at the X at 600 yards, the bullets would hit almost exactly 6 feet low. In other words, the "drop", or the "come ups" as we rednecks say, is right around 6 feet at 600 yards.

That much is true according to everyone's ballistics apps. Everyone argued that if the "drop" at 600 yards is 6 feet, then in order to hit the X at 600 yards the bullet must arc roughly 6 feet above the line of sight. I must admit that doesn't sound obviously wrong at first blush.
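One way to test the "6 feet of drop means 6 feet of arc" argument is a minimal vacuum (no-drag) sketch. It assumes constant bullet velocity, treats the sight line as the bore line (scope height ignored), and uses small-angle approximations; the function names and the 2800 fps velocity are illustrative assumptions, not values from the post. In this model the come-up at range R from a zero at R0 is g*R*(R - R0)/(2*v^2), and the peak of a trajectory zeroed at R sits at mid-range at g*R^2/(8*v^2). Gravity and velocity cancel in the ratio, so the apogee is R/(4*(R - R0)) times the come-up.

```python
"""Vacuum (no-drag) comparison of apogee vs. come-up: a sketch, not a ballistic solver."""

G = 32.174  # standard gravity, ft/s^2


def come_up_in(range_yd: float, zero_yd: float, velocity_fps: float) -> float:
    """Inches low at range_yd for a rifle zeroed at zero_yd (hypothetical helper).

    Assumes constant velocity (no drag), sight line == bore line, small angles.
    """
    r_ft, r0_ft = range_yd * 3.0, zero_yd * 3.0
    return 12.0 * G * r_ft * (r_ft - r0_ft) / (2.0 * velocity_fps ** 2)


def apogee_in(range_yd: float, velocity_fps: float) -> float:
    """Peak height (inches) above the sight line when zeroed at range_yd.

    Same assumptions; in a vacuum the peak sits at mid-range: g*R^2 / (8*v^2).
    """
    r_ft = range_yd * 3.0
    return 12.0 * G * r_ft ** 2 / (8.0 * velocity_fps ** 2)


def apogee_from_come_up(come_up: float, range_yd: float, zero_yd: float) -> float:
    """Apogee implied by a known come-up; g and velocity cancel out of the ratio."""
    return come_up * range_yd / (4.0 * (range_yd - zero_yd))


if __name__ == "__main__":
    # 72 in (6 ft) of come-up at 600 yd from a 100 yd zero, as in the post.
    print(round(apogee_from_come_up(72.0, 600.0, 100.0), 1))  # 21.6
    # Any velocity gives the same ratio in this model; 2800 fps is illustrative only.
    v = 2800.0
    print(round(apogee_in(600.0, v) / come_up_in(600.0, 100.0, v), 3))  # 0.3
```

With 6 feet (72 inches) of come-up, this toy model puts the apogee at roughly 22 inches: the same ballpark as the 28 inches reported above, and nowhere near 6 feet. Real bullets slow down, which makes the path asymmetric (the peak moves past mid-range), so a drag-aware ballistics app will not match this sketch exactly.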

My app was the only one that showed the bullet path at various ranges, both in a chart and as a graphic of the arc. They weren't convinced, insisting that my app was simply wrong, even though their apps showed only the "drop", i.e. the required scope adjustment. These guys are experienced shooters, and for many years they have believed that the "drop" is not only how much they must adjust their scopes but also an indication of how high the bullet arcs above the line of sight in medium- and long-range target shooting.

The manual calculation for this is a little tedious and not at all intuitive (except to math majors), since it involves the tangent of the launch angle and a squared cosine in the denominator. I've studied enough math and engineering to pound through it and prove that my app is correct: the actual apogee of a bullet hitting the X at 600 yards is much less than what most shooters call the "drop", i.e. the scope adjustment needed to go from a 100 yd zero to the 600 yd setting.
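In its simplest textbook form, the calculation described here is the vacuum trajectory below. This is a sketch under stated assumptions: no air drag, a sight line that coincides with the bore line, muzzle velocity v, gravity g, launch angle θ for a zero at range R, and launch angle θ₀ for a zero at R₀ (with trajectory y₀). A full ballistics app with a drag model will give somewhat different numbers.

```latex
\begin{aligned}
y(x) &= x\tan\theta - \frac{g\,x^{2}}{2v^{2}\cos^{2}\theta}
  && \text{height above the line of sight at range } x \\
y(R) = 0 &\;\Rightarrow\; \tan\theta = \frac{g\,R}{2v^{2}\cos^{2}\theta}
  && \text{zeroed at range } R \\
y_{\max} = y\!\left(\tfrac{R}{2}\right) &= \frac{g\,R^{2}}{4v^{2}\cos^{2}\theta} - \frac{g\,R^{2}}{8v^{2}\cos^{2}\theta}
  = \frac{g\,R^{2}}{8v^{2}\cos^{2}\theta}
  && \text{apogee at mid-range} \\
D &= -y_{0}(R) = \frac{g\,R\,(R - R_{0})}{2v^{2}\cos^{2}\theta_{0}}
  && \text{come-up at } R \text{ from a zero at } R_{0} \\
\frac{y_{\max}}{D} &\approx \frac{R}{4\,(R - R_{0})} = \frac{600}{4 \cdot 500} = 0.3
  && \cos^{2}\theta \approx \cos^{2}\theta_{0} \approx 1
\end{aligned}
```

Read in plain terms: at mid-range the launch line has climbed gR²/(4v²) above the line of sight, and gravity has already pulled the bullet back down by half of that. What is left at the peak is only one quarter of the full-flight gravity drop gR²/(2v²).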

However, my shooting pals aren't much interested in talking about tangents and cosines, especially while drinking beer. I confess I was at a loss to explain, in simple everyday terms, why the top of the arc of a hit on the X was much less than the "drop" at 600 yards. I just couldn't think of a simple analogy, like throwing baseballs, that would make my argument easy to understand.

How can I explain a ballistic arc to people whose educational background consisted mostly of Sex, Drugs, and Rock-N-Roll?